Cognitive Complexity and Degree Planning: Student Perceptions of and Needs From Technology Tools

Melinda Mechur Karp¹* & Ciji Heiser²

¹Phase Two Advisory

²American University

Abstract

Colleges around the country have adopted technology tools to simplify planning and registration processes and provide interventions to support students’ timely completion. However, there is little evidence of these tools’ efficacy in improving student progression and completion (cf. Rossman et al., 2021; Velasco et al., 2020). The minimal impact of advising and planning technologies on student success is amplified among institutions supporting the students who make up the new majority of postsecondary learners: those who are Black, Latine, Indigenous, and/or low-income (BLI/LI). One possible reason for this is that such tools were not designed with the needs of this population in mind. Using process map and focus group data from students at two broad-access universities, coupled with interview data from institutional stakeholders, this study interrogates the ways that BLI/LI students engage in academic planning. We find that this process is more cognitively complex and nuanced than is typically acknowledged; program planning tools do not include the information BLI/LI students require; and low-income college students are particularly disadvantaged by these tools. As such, we find support for the hypothesis that current tools’ efficacy is muted because they do not center the needs of BLI/LI students in their design.

Keywords: advising, advising technologies, first-generation college students, Black/Latine/Indigenous college students

* Contact: melinda@phasetwoadvisory.com

© 2024 Karp & Heiser. This open access article is distributed under a Creative Commons Attribution 4.0 License (https://creativecommons.org/licenses/by/4.0/)


Al¹ is a college student in Ohio. He is from a low-income family, and is an emancipated minor. Like most college students today, he uses degree planning and scheduling tools to help him select, enroll in, and keep track of the classes he needs to take on his way to graduation. Despite his understanding of these tools, he calls course planning and registration “headache inducing.” He has had trouble registering for the right courses at the right times, and his graduation has been delayed.

Al is not unique. Colleges around the country have adopted various technology tools for academic planning, scheduling, and early alerts (Galanek et al., 2018). These tools aim to help students seamlessly identify the courses they need to graduate and avoid courses that will not count as a requirement. They also help students build multisemester plans so that they can have a clear path to completion; help advisors communicate with their students; provide early alerts to intervene and support student success; and serve as a degree audit to ensure graduation requirements are met. The growth in planning and scheduling tools comes, in part, from research showing that student progression and completion are hindered by inaccurate degree planning (Bailey et al., 2015; Zeidenberg, 2012). By simplifying the planning and registration process, academic planning tools are assumed to enable more effective “self-advisement” by students, thereby reducing the burden on overcapacity advising teams.

And yet, as Al’s story shows, these products have not yet lived up to their promise. This is particularly true for students, like Al, who are the “new majority”—Black, Latine, Indigenous, and/or low-income (BLI/LI)—for whom college was not designed (Thelin, 2019). A recent study of broad-access community colleges and universities, which typically enroll large proportions of new-majority students, found minimal impact of degree planning and early alert software on completion rates (Velasco et al., 2020). One possible reason for this finding is that degree planning tools and advising processes were not designed or implemented in ways that center the needs of BLI/LI students and thus do not effectively support their academic success.

This qualitative study interrogates this hypothesis by directly asking BLI/LI students at broad-access institutions to identify their needs when engaging in planning and scheduling processes, and to share how current tools do and do not meet their needs. We contextualize students’ experiences with insights gleaned from higher education professionals who purchase, adopt, implement, and maintain such tools at broad-access institutions, including advisors, IT professionals, and university registrars. Our analysis indicates that current tools do not meet the needs of new-majority students, thus likely muting the tools’ impact. We conclude with a call to redesign tools with student voice and needs at the center and provide concrete suggestions regarding what this might look like.

Literature Review

The population of the United States is growing increasingly diverse, as is the population enrolling in postsecondary institutions (Espinosa et al., 2019). While enrollment trends in postsecondary institutions are shifting along with the national demographic, research has found considerable differences in outcomes by race: Black students have the lowest persistence rates and highest undergraduate dropout rates among demographic groups, and educational outcomes for Indigenous students are also low, although data on these students lack precision (Espinosa et al., 2019).

The causes of student non-completion are complex, but there is ample evidence that degree planning, excess crediting, and advising are among the key contributors. Research conducted by Bailey et al. (2015) and Zeidenberg (2012) found that student progression and completion are hindered by inaccurate degree planning. Studies indicate that on-time degree completion is more likely to occur when students enroll in key courses and accrue credits that count towards graduation (Belfield et al., 2019) and when students avoid “over-crediting,” that is, earning more credits than required for their degree (Zeidenberg, 2012). Effective degree planning supports both of these mechanisms by making sure that students take the right courses at the right time, while avoiding courses that will not support progress towards earning the degrees they seek.

Both students and institutional actors believe that academic advising, supported course selection, and academic planning are essential to student success (Bharadwaj et al., 2023). Moreover, students themselves indicate that effective academic advising and proactive support are critical in helping them feel connected to an institution of higher education and are integral in their decisions around whether or not to remain enrolled (Bharadwaj et al., 2023; Kalamkarian et al., 2020; Karp et al., 2021). One study conducted by a technology provider shows that academic advising and degree planning programs are particularly important for BLI student success (Civitas Learning, 2020).

Given that 61% of colleges and universities identify success imperatives and data-driven decision making as trends at their institutions (Grama & Brooks, 2018), there is a growing movement toward using technology to address advising challenges. Tools have been developed and launched under the assumption that by providing accurate, easily accessible information, technology solutions can reduce excess credit-taking while enabling advisors, through early alert features, to identify and proactively intervene with students who are off the path to graduation. Moreover, designers and implementers hope that these tools will increase equitable outcomes across student demographics.

The success of these efforts has been underwhelming, however. Velasco et al. (2020) found null effects of the implementation of advising and planning tools in two- and four-year institutions. In a review of available studies meeting its most rigorous What Works Clearinghouse standards, the U.S. Department of Education’s Institute of Education Sciences also found no positive impact of technology-mediated advising or technology-based advising tools on student outcomes (Karp et al., 2021). Two other large-scale randomized controlled trials of current advising tools, including program planning and tracking tools, found similar null impacts (Mayer et al., 2019; Rossman et al., 2021). In addition, Pellegrino et al. (2021) found that while institutions are employing advising technology like early alert and case management systems, they struggle to leverage them effectively and to connect the information contained in these systems to degree planning and completion efforts.

The minimal impact of advising and advising technologies on student success is amplified among institutions supporting BLI/LI students. Studies of advisors and students both find limited student access to (Shaw et al., 2021b) and engagement with (Shaw et al., 2021a) advising technologies as a key challenge. These concerns are stronger at institutions enrolling high percentages of students who are low-income, as compared to those enrolling higher proportions of high-income students (Shaw et al., 2021a). In other words, institutions with high numbers of Pell-eligible students are less likely to engage in high-impact advising practices (Shaw et al., 2021a).

Research also shows that students have inequitable access to advising technologies (Shaw et al., 2021b). Students who may most need the support of technologically assisted advising and degree planning may not have ready access to such technologies. A 2020 national study of college students, for example, found that more than 1 in 6 reported internet accessibility challenges and/or hardware and software challenges that interfered with their learning (Means & Neisler, 2021). BLI/LI students reported a greater level of challenge than their majority and wealthier peers.

There are many possible reasons why advising technologies do not support BLI/LI students, but one is that the available technologies do not meet the actual needs of these student groups. In fact, survey data indicate that this might be the case. In one national survey of higher education practitioners, barely a majority of those at institutions serving primarily students of color responded that they believe their institution “understands the aspirations and experiences of students and uses that information to design and provide culturally-responsive student supports” (Shaw et al., 2021b).

Theoretical Framework

This study seeks to interrogate why advising and planning technologies have not improved student outcomes for BLI/LI students. We take a critical and student-centered approach, hypothesizing that if advising programs and technologies are to be more effective, they need to be considered, implemented, maintained, and leveraged by designers and back-end users to support the success of new-majority BLI/LI students. As such, we ground our inquiry in critical qualitative and culturally-responsive evaluation theories.

In the context of a culturally diverse and changing demographic population, perspectives that explore the intersections of knowing, being, and acting are particularly useful for understanding the reasons underlying observed phenomena, such as the unexpectedly muted impacts of technology (Carspecken, 2012). Critical perspectives focus on power; discourse that shapes daily life; race, gender, and socioeconomic identities; and the intersection of these identities with the structures affecting historically underserved groups (Cannella & Lincoln, 2012). Culturally responsive approaches seek to explore and understand culture as a part of the assessment and evaluation enterprise by centering culture and cultural perspectives.

These perspectives challenge us to unpack implicit assumptions inherent in educational interventions, such as technology development. Former president of the American Evaluation Association and culturally responsive evaluator Kirkhart (2010) writes, “When it is not visibly identified, the default operating is a dominant majority perspective. Persons with non-majority identification become distanced or treated as ‘other,’ often with oppressive consequences” (p. 402). We hypothesize that centering BLI/LI student perspectives will help us uncover the nuanced and potentially hidden reasons for the null impacts of technology-mediated advising and course planning observed in the literature.

Given these frameworks, our inquiry’s data collection methods and analytic approach, as described in the next section, are driven by an attempt to elicit the experiences of BLI/LI students themselves; an acknowledgment of the many ways that identities show up and intersect with one another and with institutional structures; efforts to engage with students in culturally responsive and empathetic ways; and a commitment to take as default the realities described by participants rather than the assumptions inherent in technology tools’ design. Our study’s hypothesis, that existing technologies have not typically been built with the needs of broad-access higher education institutions and their students in mind and thus cannot effectively meet their needs, is grounded in a critical interrogation of the taken-for-granted usefulness of educational technology tools.

Research Design and Methodology

This study seeks to understand why technology-enabled approaches to academic advising and degree planning show minimal impact for new-majority students. Building on findings that institutional actors do not believe these tools understand and address the aspirations and experiences of BLI/LI students, we explore how the students themselves experience the technology-enabled degree planning process. We start with the assumption that centering BLI/LI students’ experiences and challenges with degree planning and tracking processes and technologies will generate a root-cause understanding of how future approaches can address the authentic needs of new-majority students and the institutions serving them. Finally, we hypothesize that current null outcomes from degree planning products stem from the fact that they do not address the actual needs of the students they seek to support.

The research questions guiding the study asked:

1. How do end-users (students and advisors) engage with and experience current degree planning and audit tools?

2. What pain points do end-users encounter with current tools and what implications do those pain points have for student persistence and completion?

3. How can technology tools, technology-related processes, and campus cultures be refined to better meet student needs while being attentive to broad-access institutions’ constraints?

We used qualitative approaches to data collection to explore student and back-end user perceptions in a way that was respectful and inclusive. We selected qualitative approaches because, “in many qualitative studies, the real interest is in how participants make sense of what has happened (itself a real phenomenon), and how this perspective informs their actions, rather than in determining precisely what happened or what they did” (Maxwell, 2013, p. 81). This study is not about students’ actions or their descriptions of those actions; rather, it explores students’ thought processes around their experiences in college using technology tools to plan and register for courses that would keep them on track to graduation.

Participant Recruitment and Study Sample

This paper draws on a robust dataset collected at two broad-access, four-year public institutions. Institutions were selected because (a) they were actively exploring ways to improve advising and advising technologies on their campuses; (b) they were diverse in location and student demographics; (c) they were public and broad-access, thereby generally representative of the types of institutions in which new-majority students enroll; and (d) they were willing to partner with the researchers to support data collection activities.

One institution is located in the Midwest and enrolls approximately 11,000 students, 24% of whom identify as racially minoritized and 41% of whom are Pell-eligible. The other institution is located in a northeast urban center and is part of a multi-institution system. It enrolls approximately 15,000 students, 85% of whom identify as racially minoritized and 52% of whom are Pell-eligible.

Both institutions assign students to advisors, but the extent to which students were required to meet with their advisors varied, based on students’ majors and class year. Advisor caseloads varied across and within the institutions, as well, with some advisors serving upwards of 600 students and others serving many fewer. Appendix A provides additional information about the two institutions.

This study was approved by the IRB at both institutions. For all data collection procedures, we were careful to ensure participant confidentiality and voluntary participation. We were particularly cognizant of the fact that we were asking (a) students to discuss potentially uncomfortable questions around race and class and (b) advisors and staff to discuss their places of employment. We were also aware of our positionality as researchers; two of the three researchers engaged in data collection for this study are white, and all of us have advanced degrees. Our data collection methods acknowledged this by making all demographic disclosures (as well as participation) voluntary and by spending time at the beginning of each interview and focus group building rapport.

Our primary data are drawn from virtual focus groups conducted with students at both institutions during the spring of 2022. Representatives from each college helped us identify and recruit students who identified as BLI/LI. These representatives also sent recruitment information to the identified students, providing them with details about the study, their rights as potential participants, and instructions on how to participate. Students were offered a $50 gift card to participate in the focus group. In order to maintain confidentiality, participants registered for the study with the research team—not the college personnel who aided in recruitment. We spoke with 24 students across the two institutions. To contextualize and triangulate our student data, we conducted interviews with college personnel who work with advising technologies or serve as advisors at each institution. We spoke with 14 advisors and 13 additional personnel.

As part of the data collection, we asked students to provide self-identifying information, as they were comfortable. We prompted them to provide information about their race, gender/gender identity, language status, full/part-time enrollment status, financial aid status, and “anything else that may help us understand your experiences in college” as part of the process mapping described below. We also offered the opportunity for students to not self-disclose any demographic or descriptive information.

This approach to collecting information about the sample means we necessarily have imperfect descriptors. However, it was in line with our theoretical framework, which emphasizes the importance of elevating what individuals believe to be important, the intersectional nature of identity, and ensuring that non-majority identities are not framed as others or the default. We did not want to presume which identities might matter to students as they navigate course planning, nor did we want to create an environment where they felt compelled to disclose identities they were not comfortable sharing with outsiders.

As a result, students provided the demographic information that was most salient to them, rather than fitting predetermined categories. In many ways, we have a richer understanding of our sample than if we had used more traditional methods; students opted to disclose things we would not necessarily have asked about, such as their statuses as DACA recipients, members of the LGBTQIA+ community, or former foster youth.

Seventeen students self-identified as Black or Latine; 15 self-identified as low-income. Twelve students identified as female and eight identified as male. Nine students indicated they are first-generation college goers; eight work full- or part-time; at least one student was undocumented and ineligible for financial aid; and one student was a runaway/emancipated minor. Given that students were not required to disclose demographic information, these numbers may underrepresent BLI/LI representation in the dataset.

Focus Groups and Process Mapping

Focus groups are a tool used for the collection of qualitative data and are described by Nastasi and Hitchcock (2015) as, “interviews (typically semistructured) conducted with a small group of informants for the purpose of exploring specific topics, domains, constructs, beliefs, values or experiences from the perspectives of cultural members” (p. 75). We chose focus groups because they are well suited for working with new-majority students as they “allow for the opportunity to validate their experiences of subjugation and their individual and collective survival and resistance strategies” (Mertens, 2015, p. 383). For this study, focus groups offer two additional benefits. First, focus groups serve as an efficient means of data collection from multiple people (Nastasi & Hitchcock, 2015). A second benefit is that the interactions between group members provide additional insight beyond that collected in individual interviews (Mertens, 2015).

All focus groups in this study were conducted virtually, using video technology. Although virtual focus groups can carry some data collection risks (e.g., difficulty building rapport, less opportunity to observe nonverbal cues), we opted for virtual engagement for a number of reasons. First, meeting with students virtually allowed us more scheduling flexibility, enabling us to conduct focus groups over weeks rather than over a few condensed days of a site visit and to vary the time of day. Given the many obligations faced by students in this study, scheduling flexibility allowed us to maximize participation while respecting students’ time.

Second, data collection occurred in 2022, and while the COVID-19 pandemic was technically over, we were mindful of heightened concerns regarding health and safety. Meeting virtually allowed us to maintain safe distance and minimize participants’ exposure to others who may be ill. These heightened safety precautions were particularly important because so many of our participants were from communities disproportionately impacted by the pandemic.

The focus group itself had two parts, leading to two different types of data. During the first section, we guided our participants through a process mapping exercise. Process mapping is widely used in business to visually represent the steps individuals take while completing a task or series of steps. It is typically used to identify breakdowns or inefficiencies in a business process (Dietz et al., 2008). Process mapping allows for an in-depth examination of a single process, in this case, planning and registering for courses. Prior research in similar contexts of community colleges has recommended the use of process mapping to explore advising processes and degree completion (Karp et al., 2021; Wesley & Parnell, 2020).

We used virtual process mapping to collect visual representations of students’ experiences and practices when planning and registering for classes in order to capture detailed, step-by-step roadmaps taken by each student while using academic planning software to make gains towards degree completion. Using Google Slides, we guided focus group participants through the process by asking them to identify what they think about, what they do, and what resources they rely upon to plan their schedules and remain on track to graduation. We then asked them to array those steps into a visual that represents their planning and registration process. We also asked them to “annotate” their maps, identifying the aspects that were easiest and most challenging. Appendix B provides a sample of these maps.

During the second half of the focus group, we used a semistructured interview protocol to ask the students to reflect upon the mapping experience, their planning processes, and the tools available to them. We audio recorded and took detailed notes during these discussions, which were used to supplement, clarify, and extend the visuals students provided via their maps.

As noted, these data were collected towards the end of the COVID-19 pandemic. As a result, our response rates were lower and our no-show rates were higher than we had hoped for, a phenomenon experienced by many researchers during the same time period (see, for example, LeBlanc et al., 2023; Rothbaum & Bee, 2022). Readers should note that our sample may not include the students most negatively impacted by the pandemic, those with the greatest caregiving and work responsibilities, or those with inconsistent access to technology. As such, our findings may actually underestimate the extent to which non-academic considerations play into students’ planning and registration processes.

Interviews

To capture back-end user perspectives and experiences we conducted virtual interviews with college personnel familiar with advising and degree planning technologies, including academic advisors, IT and IR staff, and registrars. Similar to focus groups, interviews are a tool for qualitative data collection that allow for in-depth exploration of a single individual’s perspective and experience on a given topic. Our college contacts helped us identify these personnel, but all interviews were scheduled and conducted by external members of the research team in order to preserve confidentiality.

These interviews used a semistructured protocol, and we took notes during the discussion. Advisor interviews focused on their use of planning and scheduling tools, perception of the tools and their usefulness for BLI/LI students, and suggested improvements of the tools. These interviews also provided context for the broader advising structure within which the tools are used. IT, IR, and registrar interviews focused on the constraints faced by broad-access institutions when implementing and deploying advising products, as well as suggestions for improvement.

Analysis

Student focus groups resulted in two types of data for analysis: the maps themselves, and the debrief conversation. We manually coded the process maps into the primary categories of the illustration: when and how students used advising and planning tools; the things they thought about, did, and used while creating course plans; their perceptions of advising and planning tools; and the challenges they faced when using those tools. We also thematically coded pain points and challenges, “trouble words,” and any written annotations. Once the initial coding was complete, we added in codes for students’ self-identified BLI/LI and first-generation status in order to identify any themes that were salient for subsets of new-majority students.

We took detailed notes during the debrief conversation and used those as our primary analytic source; however, we also voice-recorded the debrief and used the recording to fill in missing aspects of our notes, confirm direct student quotes, and parse contextual meaning as necessary. We used the notes to triangulate on the initial findings from our process map analysis, engaging in close reading to further understand the themes emerging from the process maps. For example, once we identified the cognitive complexity of program planning in the maps, we read the notes to understand how students describe that complexity, how they feel about it, and the implications it has for their decision-making processes and degree completion paths.

We also took detailed notes during the college personnel interviews. We analyzed the interview notes thematically, using them to triangulate on what we heard from students and to supplement our understanding of institutional constraints that could influence technology purchases, adoption, and implementation processes and practices.

Trustworthiness and interpretive reliability were achieved in a number of ways. First, researchers read the notes and identified potential coding schemes individually. During team meetings, we compared our potential codes and themes and identified those that resonated with the full group. Areas of disagreement were discussed until we could reach consensus on what appropriate codes and themes would be, given our respective reading of the data. These consensus codes and themes became the structure for our analysis.

Once we began coding, we again worked individually at first. During team meetings, we discussed tricky codes and contradictory or confusing thematic findings. Again, through discussion and close collective reading of the data, we came to consensus regarding how to resolve these analytic challenges. Finally, we ensured external validity by sharing early findings with key contacts at each college for member-checking and feedback, and by having a fourth researcher, who joined the team during the report authorship phase, reread the findings with fresh eyes.

Findings

Data analysis revealed three key findings that provide insight into why BLI/LI student progression has not increased even as colleges have adopted course planning tools. They provide insight into (a) the ways that students experience course planning as a cognitively complex process, (b) the need to supplement available tools to meet this cognitive load, and (c) the challenges identified by students when using existing degree planning tools. The analysis also revealed a fourth finding related to the ways that low-income students are uniquely challenged by the broader shortcomings of existing advising and degree planning processes and tools. Overall, student data provide support for our hypothesis that null impacts of degree planning technologies stem at least in part from those technologies’ inability to meet the actual needs of today’s new-majority postsecondary students.

Finding 1: Students Experience Course Planning as a Cognitively Complex Process

The students’ process maps and their semistructured reflections provided rich insight into how BLI/LI students approach course planning, and they indicate that course planning, scheduling, and registration together form a remarkably complex cognitive process. It is dynamic and multifaceted, reflecting the nuances of new-majority students’ day-to-day lives and priorities. Jesus, a Latino male who speaks Spanish at home and is an adult student, summed up this complexity by saying that when he logs into his planning tool, the first thing he thinks is, “Where do I even start?”

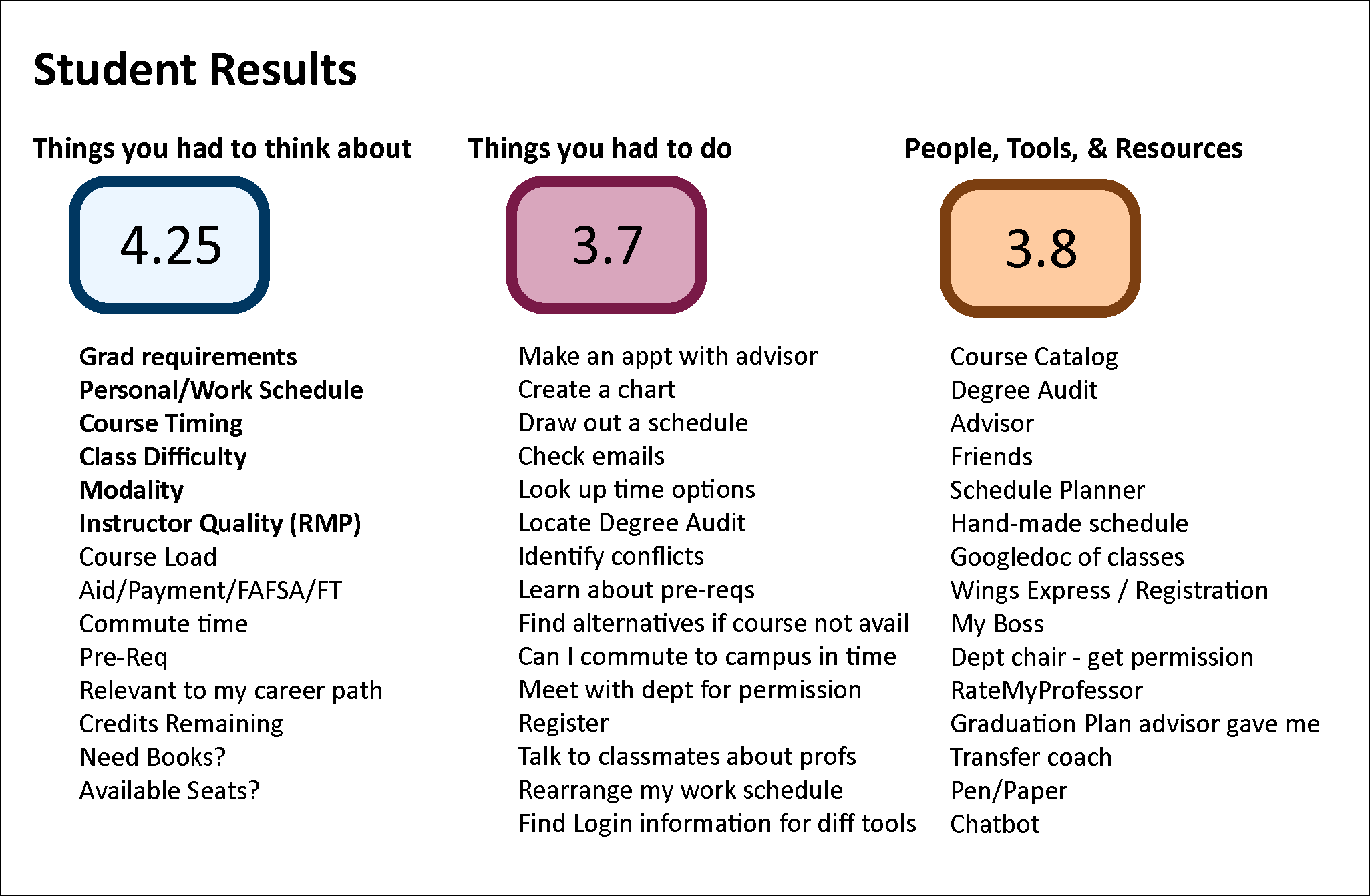

In analyzing the process maps, we found that, on average, students need to think about 4.25 things just to create a course plan for a single semester.² Some students listed as many as nine. Students thought about obvious planning and scheduling concerns, such as meeting course requirements. But they also thought about things seemingly unrelated to degree progression, including the quality of teaching in a given section, their work and family obligations, unexpected fees and costs, and whether they find a class interesting or relevant to them.

In fact, 21 of the 24 students thought about graduation requirements. But 14 students also thought about their personal or work schedules; 11 thought about course timing, usually in relation to other obligations; and 10 thought about their course load. Overall, there were 28 mentions of graduation requirement-related “thinkings,” but 60 mentions of thinkings not directly related to graduation requirements. The cognitive complexity of program planning is further reflected in the number of steps students take (3.7, on average) and tools and information sources they access (3.8, with some students relying on as many as 7).
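Tallies of this kind can be derived mechanically once each map has been coded. The following Python sketch is illustrative only: the student labels, the code names, and the `summarize` and `share_unrelated` helpers are hypothetical, and the toy data are not the study's data. The study itself relied on manual coding. Assuming each map has been reduced to a list of coded “thinkings,” the sketch shows how a per-student average, a count of students per code, and the split between requirement-related and other mentions could be computed.

```python
from collections import Counter
from statistics import mean

# Hypothetical coded process maps: one list of "thinking" codes per student.
# Labels and codes are invented for illustration; they are not the study's data.
coded_maps = {
    "student_a": ["graduation_requirements", "work_schedule", "course_timing"],
    "student_b": ["graduation_requirements", "course_load", "teaching_quality",
                  "fees", "family_obligations"],
    "student_c": ["work_schedule", "course_timing"],
}

# Assumption: only this code counts as directly related to graduation requirements.
REQUIREMENT_CODES = {"graduation_requirements"}


def summarize(maps):
    """Summarize coded maps: mean thinkings per student, students per code, mention split."""
    per_student = [len(codes) for codes in maps.values()]
    # Count each code at most once per student to get "number of students who thought about X".
    students_per_code = Counter(
        code for codes in maps.values() for code in set(codes)
    )
    all_mentions = [code for codes in maps.values() for code in codes]
    related = sum(1 for code in all_mentions if code in REQUIREMENT_CODES)
    return {
        "mean_thinkings": mean(per_student),
        "students_per_code": students_per_code,
        "related_mentions": related,
        "unrelated_mentions": len(all_mentions) - related,
    }


def share_unrelated(related, unrelated):
    """Fraction of all mentions that are not directly tied to graduation requirements."""
    return unrelated / (related + unrelated)


summary = summarize(coded_maps)
toy_share = share_unrelated(summary["related_mentions"], summary["unrelated_mentions"])
# Same ratio applied to the mention counts reported in the text (28 related, 60 unrelated).
reported_share = share_unrelated(28, 60)
```

Applied to the mention counts reported above, the same ratio is 60 of 88, meaning roughly two thirds of the things students listed were not directly about graduation requirements.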

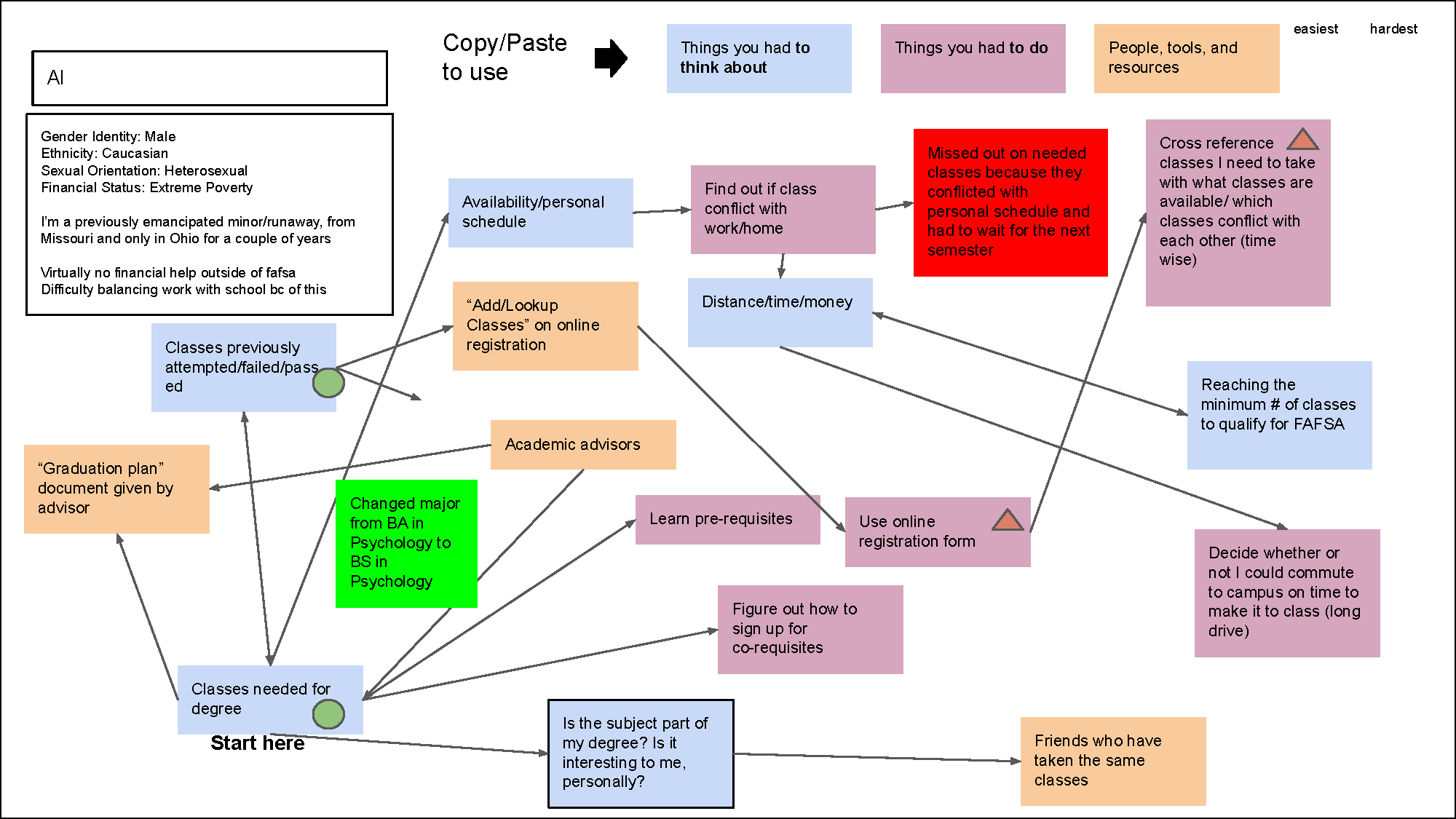

The complexity of program planning and scheduling is evident in both students’ maps and their reflections on the mapping process. Al, the student we met at the beginning of this paper, described his map by saying,

I made mine messy as an artistic statement. I find the process very confusing and frustrating and a lot of going back-and-forth between different documents . . . I was surprised at how much thinking goes into registering that we don’t think about . . .

If we look at his map (see Appendix B), we can see that there are multiple paths to registering for courses, as well as a number of considerations that have nothing to do with graduation requirements.

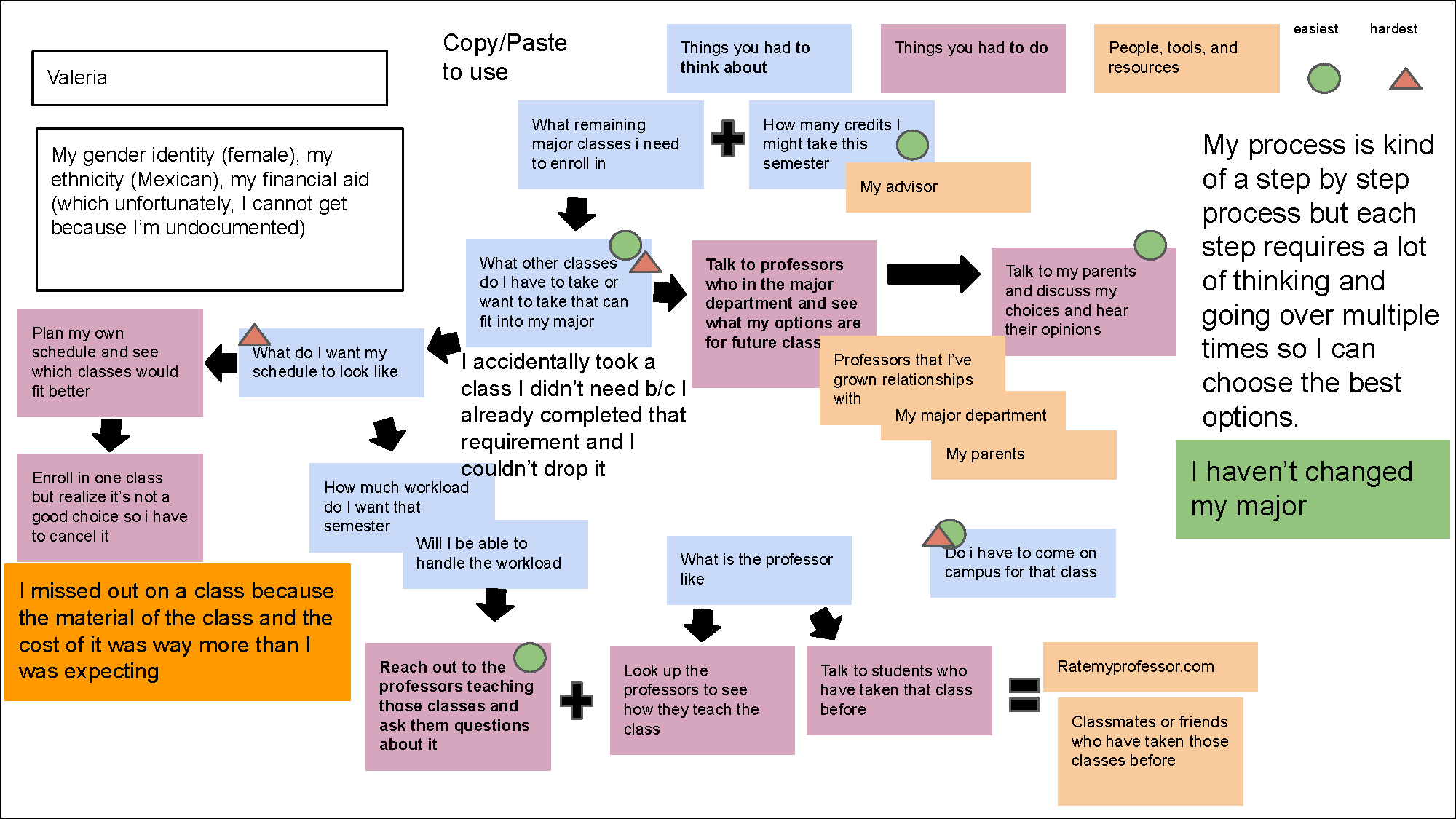

Valeria, a Chicana student who is undocumented and therefore ineligible for financial aid, reiterated the point that each step in the planning process is cognitively complex. (Her map is also shared in Appendix B.) She said, “My process is kind of a step-by-step process but each step requires a lot of thinking and going over multiple times so I can choose the best options.” She noted that the available tools were useful since they showed what courses had been completed and “what to focus on next,” but also implied that there were many considerations outside of what was provided by those tools. In her map, she notes having to drop a class due to unexpected and unstated course fees, reaching out to professors to get additional information about courses, and using ratemyprofessors.com and speaking to others who have taken classes. These aspects of planning indicate that she needs information about the classes themselves—not just the requirements listed in the planning tool—to build an academic plan and schedule that will work for her.

That students consider many things when registering for classes is not a new insight. However, the quantification of this complexity via process maps is important—and as we will see, has implications for the efficacy of course planning software. The specific types of things students think about are the types of things they need software to address. Understanding how students themselves approach the cognitive task of course planning and registration is an important first step in building tools that meet their needs.

Finding 2: The Need to Supplement Program Planning Tools

Based on our analyses of the maps and semistructured interview data, students generally engage with advising and planning tools when it is time to register for classes or check their graduation status. By and large, they do not use the tools to create long-term academic plans or communicate with advisors about their academic progress, although those functionalities are available. Instead, students log in to check the classes they need, when they are offered, and whether or not a given course meets their requirements. In other words, students engage with planning tools in ways that are task-oriented.

Students found that the tools were adequate for these basic scheduling purposes. They felt that the tools did what they were supposed to do: give them insight into the classes they had taken and what they needed to take, and provide an avenue for registering for the next semester. When explicitly asked how they felt about the planning tools at their disposal, 11 students made comments that were explicitly positive, while only five gave negative comments (the remainder had no opinion).

That said, further analysis indicates that technology-based products do not fully address the needs faced by BLI/LI students during the course planning and registration process. Recall that students’ maps indicated that on average, students in our study used 3.8 different tools and information sources to plan and register for their courses, with some students relying on up to 7. Students noted that they need to supplement campus-based advising tools with external tools, notably the website ratemyprofessors.com (8 students) and pencil and paper (7 students).

Notably, most of these tools were not program planning tools, indicating that the tools as they currently exist do not provide functionality to meet all of the cognitive and logistical demands faced by students during the course planning process. In other words, because program planning is such a complex task, students need a complexity of information—but that information is not currently housed in available technology tools, thus forcing them to turn elsewhere and introducing even more complexity into their course planning process.

Finding 3: BLI/LI Students Experience Challenges Using Existing Degree Planning Tools

The data collected during the reflective portion of the focus groups provide further evidence that advising and planning tools are not helping BLI/LI students build course plans that help them progress to graduation. When probed, students indicated that the tools have room for improvement and that the available technology, while adequate, can create pain points or challenges. These challenges were also identified by advisors and back-end staff, confirming student perceptions that existing tools are insufficient to accelerate student success.

First, students in our study viewed some of the technology tools as less simple to use, easy to interpret, or intuitive than they would like. They found that often, information was hidden or opaque, or the visual appearance of the tool was confusing. Eight students indicated that this was a problem for them. Teddy, a Black male student, described his frustration by saying that the tool “is a pie chart. A lot was green so I thought I was done. But if you click something else, there are major requirements. And that was a lot of red.” As a result of misinterpretations like Teddy’s, a number of students ended up making scheduling and registration mistakes that cost them time or money; others indicated that the confusion led them to use other information sources or resources.

Back-end users confirmed this perception, adding that usability was particularly challenging for students who relied on mobile interfaces, as is common among low-income students. Miranda, who leads a student-facing service unit, explained that:

Some of the systems are not intuitive or not mobile friendly. There are a lot of pop-up windows that do not work on students’ phones. Most if not all students are using their phones and workflows are not designed for mobile. It frustrates them and they need help to complete the tasks on a computer.

Second, students and staff alike find the available advising and planning technology tools “clunky.” Students noted that the tools often take a long time to navigate, do not connect to other tools or information sources, or take a long time to access and use. These complexities go beyond the presentation of information to the functionality of a product itself. Octavia, a Black, first-generation college student who works full time as part of a single-parent household, described using a course scheduling tool by saying that the “whole website bugs out . . . The page won’t work, it sends you back two pages, go back to the class. You have to make sure you press to modify the search or it will redo the whole search.”

College staff confirmed these challenges, often noting that the reliance on multiple systems with slow data transfers led to the “clunkiness” felt by students and, ultimately, inaccurate information. For example, overnight or longer lead times to transfer data between two systems can affect the student experience with registration or create confusion for the student. Julie detailed one example of this, explaining,

The data between the student information system and the degree audit is not always refreshed overnight. It can take 3 or 4 days even . . . They declare it in the SIS system but the degree audit is not updated. It is showing students as undeclared and their courses are not populated properly in the degree audit system.

A third challenge posed by degree planning tools for students in our study related to the language used. New-majority students found that much of the terminology was overly complicated or unfamiliar. As part of the process mapping exercise, we asked students to list words they encountered during planning, registration, and scheduling that were confusing or made it difficult to make good choices. Students listed 25 different “trouble” words, many of which are used in the tools themselves but are not explained to or defined for students. The most common of these were corequisite, prerequisite, asynchronous, and Writing Intensive. A full list of these words can be found in Appendix D.

Finally, and perhaps most importantly, students found that the information they needed most was not included in the tools, limiting their efficacy. As discussed in the previous section, students take a robust set of considerations into account when planning and scheduling their courses. Much of this information is not available in the institution-provided tools. Students frequently noted that they had trouble determining whether courses were online or in person, accessing information about additional fees, learning whether course sections had already been filled, and finding specifics regarding the instructor and course expectations. Some students, like Nika, a Black student who works, noted that available information is often outdated.

College personnel concurred with this assessment, though they often contextualized this challenge within the constraints faced by institutional technology and data infrastructures. For example, a student success staff member named Hakim noted that at his institution, it can take up to three days for a new course section to be added into a degree planning system. Though such delays may be understandable given the resource constraints faced by broad-access institutions, they still created challenges for students and ultimately reduced the ability of degree planning tools to meet those students’ needs and support their academic progression.

Finding 4: Low-Income Students are Particularly Disadvantaged by Existing Planning Tools

Because we were keen to center the experiences of BLI/LI students, we also analyzed the data with an eye towards differences across self-reported racial and income groups. Across racial groups, students reported engaging with technology similarly. Most also indicated that they believe the tools are race-neutral, in that their experiences do not vary based on their race or ethnicity (both in terms of what they reported and with regard to their answers when explicitly asked if race influences their technology engagement).

However, students did indicate that income status substantially influences their engagement with advising and planning technology tools. Students reported that the reliance on technology disadvantages low-income students, particularly because these students are less likely to have consistent internet access, which in turn creates barriers to timely registration. This might seem like a small challenge, but when contextualized within the constraints discussed earlier, it is compounding and therefore substantial. Al, whom we have already met, explained that he has trouble leveraging his priority registration status to get the classes he needs because his internet goes down. His graduation was delayed as a result. He said, “[We all] register at the same time and people with consistent internet are prioritized just by the fact that it’s first-come, first-served.”

The challenges identified in Finding 3, in particular, had real-world consequences for the students in our study. As our findings make clear, building a course schedule is, for many BLI/LI students, a complicated puzzle in which they need to fit together academic requirements, work demands, family obligations, and financial realities. Any delay in access to a course schedule or registration opportunity, or any missing piece of information—the very pain points identified by students in Finding 3—can create a cascading impact that results in excess stress, delayed graduation, or additional costs.

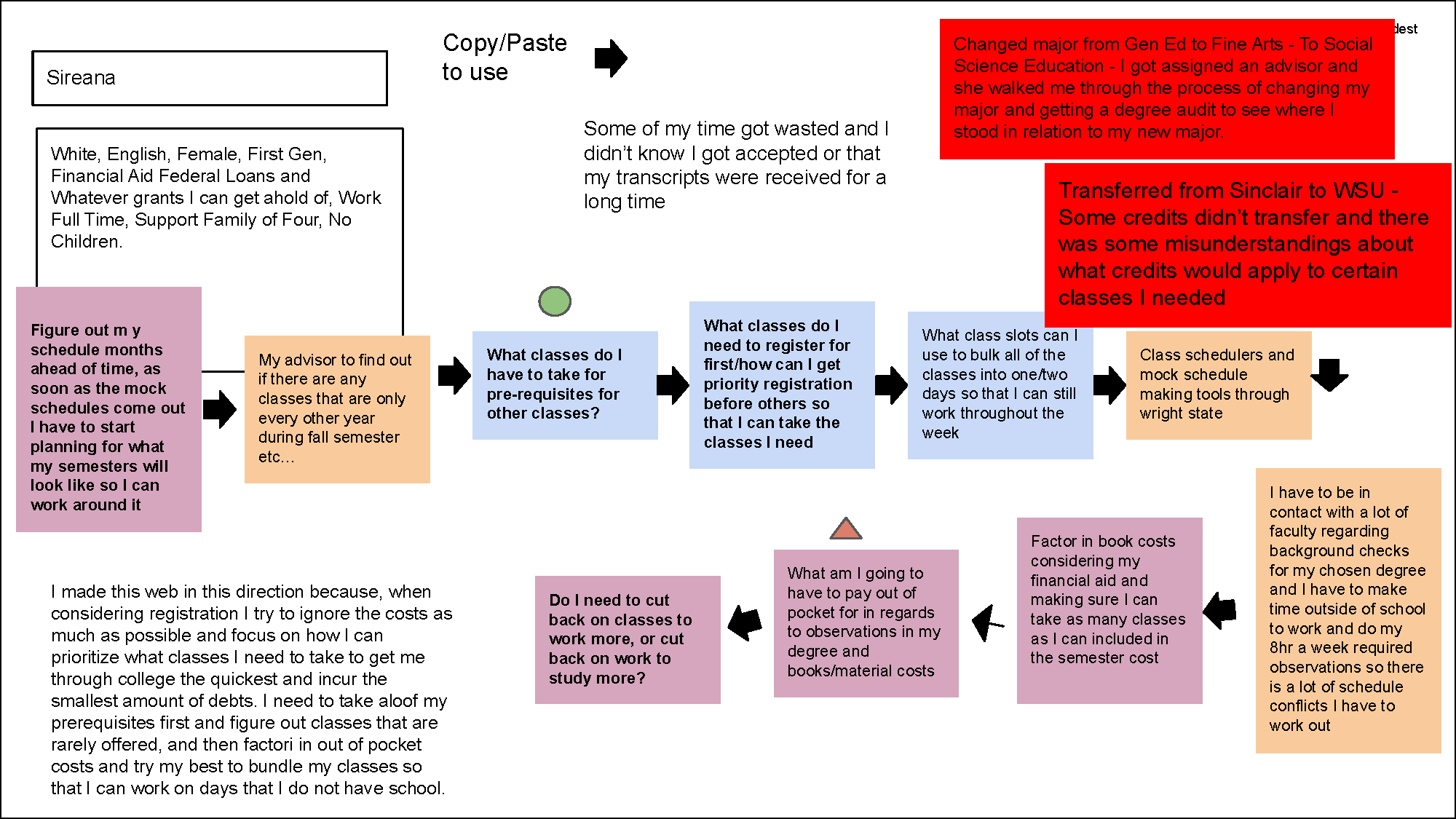

Students are careful to plan course schedules that enable them to balance work and school; if they are unable to register on time, and the class sections they need are filled, it is often impossible for them to select an alternative. Instead, they have to wait until a later semester to take the classes they need. Sireana, a low-income, first-generation college student who works full time to support her family of four, explained that she tries to build a draft schedule as soon as courses are released. “I figure out my schedule months ahead of time, as soon as the mock schedules come out, I have to start planning for what my semesters will look like so I can work around it.” Her map is included in Appendix B.

Valeria, whom we met earlier, explained that she enrolled in a course only to find out that the materials cost extra, and she had to drop it as a result. Jennifer, a Latina first-generation student who took a break from college and has now returned, explained how tools that were difficult to interpret created undue stress and almost waylaid her graduation. She relies heavily on a degree planning and audit tool but did not see a section of the tool that indicated she needed additional courses. She did not find out that she was missing required courses until she applied for graduation, at which point she had to scramble to find an “alternative credit option” that would enable her to stay on track. With great emotion, she described how, even though she ultimately worked it out, the situation was “stressful.” She emphasized that using the tools is not difficult but “what’s difficult is to know what I do not know. . . . I didn’t know [what was missing or what alternative credits were]. I’m first gen. I don’t have anyone to tell me it’s all right.”

As these examples illustrate, technology pain points were particularly salient for low-income and first-generation3 college students. The students in our study were clear that the technology tools they were expected to use assumed a base level of knowledge that they did not have. Even supposedly simple things, like knowing when to register or what higher education terminology meant, were not simple for those who did not have family or friends to guide them. Mariaumu summed up this point by saying, “You have to know what you’re looking at. [The technology] assumes you know things.” In other words, as with much of higher education, assumptions about what students already know undergird the resources and technological tools designed to support student success.

Analysis

The findings above illustrate the complexity of the planning process for new-majority BLI/LI students. They also show that current tools do not acknowledge this complexity and, in fact, often make it worse. Moreover, students indicate that they need tools that help them manage complexity, particularly if they come from low-income or first-generation backgrounds.

Our findings therefore support our hypothesis that current planning tools have had minimal positive impact on student progression because they do not actually meet student needs. If planning tools are inaccessible, confusing, or not providing necessary information, it would stand to reason that those tools cannot achieve their stated outcome—which is to provide students with a simpler path to fulfilling graduation requirements. In fact, the student maps developed during data collection indicate multiple times that the planning tools themselves got in the way of students’ timely progression. (One example can be found in Valeria’s map in Appendix B, where she was unable to drop a course she had registered for even though she did not need it.) It logically follows that if program planning tools do not meet students’ needs, those tools would not change student outcomes.

To check our conclusion that current tools do not support BLI/LI students’ academic planning and degree progression, we analyzed the data to understand what type of tools would be effective. In other words, if students asked for the tools that already exist, we could not attribute low efficacy levels to those tools. But if students indicated that features, functionalities, and information not currently included in the tools would be useful, we could once again conclude that existing tools are insufficient.

Thus, we analyzed the reflective focus group notes by coding for instances of student expressions of what they want and/or need in a program planning tool. These instances were typically aspirational (e.g., “it would be nice if . . .”) or critical (e.g., “the tool does not . . .”). In some instances, they were direct responses to a focus group prompt asking what suggestions students would make to improve advising and planning technologies; in others, they were spontaneous feedback.

This analysis confirmed that students want planning and advising tools that are different from what currently exists. They desire tools that help them navigate cognitive complexity as well as the multiple considerations they take into account when building course schedules to stay on track to completion. They also want integrated tools to make planning and scheduling easier, additional information to help their decisions, and a more intuitive user experience. Notably, these suggestions directly speak to the challenges that they articulated when describing their experiences using the tools. In other words, students’ suggestions substantiate our hypothesis that current advising tools may not positively impact student progression because they do not address the real needs of BLI/LI students—that is, they ignore the lived cognitive complexity of course planning and registration that is so salient for these students.

Our analysis reveals three categories of suggestions. First, students desire different information than what is currently available in planning tools. This information would directly speak to the complexity of planning for BLI/LI students. Students want course planning tools to acknowledge the reality that course selection and registration decisions encompass more than whether or not a class meets graduation requirements, helping them take into account myriad other factors including schedule, cost, and quality. Students suggest that advising and planning tools include additional information and prioritize transparency and real-time accuracy.

Students were particularly interested in access to information about instructor quality so that they know who is teaching courses and what to expect from their instructors prior to registration. They currently use ratemyprofessors.com for this purpose but acknowledge it is not trustworthy. As one student put it, “If I’m going to take a class with a new professor I’ve never heard of, I want to make sure that they are a good professor and they have a good work ethic or whatever.” They also want information regarding book costs and lab fees.

Second, students suggested that products and their colleges’ websites should integrate various tools in order to reduce complexity, save them time, and streamline their information sources. This directly speaks to the earlier finding that students often must use a multitude of tools and resources in order to think about, plan, schedule, and register for classes. Jumping back and forth between tools takes time and introduces the opportunity to make mistakes, and the students in our sample could afford neither lost time nor mistakes. To ameliorate this complexity, students wanted a single planning tool, or a tool with real-time information, often coupled with human reassurance.

Third and relatedly, students wanted tools to further reduce cognitive complexity by increasing “choice architecture”—essentially scaffolding their decision making so that the number of things they have to think about could be reduced while also creating efficiencies of time and effort. At their core, these suggestions reflect the fact that students want to be able to log into their primary student portal and see a message akin to “based on your major, here are your required classes.” To that end, they suggest that planning tools provide suggestions or recommendations of courses an individual student should consider taking next semester. In the tools to which students in this study had access, they were typically given a bucket of remaining requirements and courses but not guided towards which ones would be best to take next semester to improve success rates and time to degree.4 Some students suggested taking this a step further and looking at recommending courses that are similar to past successful courses.

Similarly, students suggested that the degree audit tools could improve their navigation, give a more complete picture of student progress, and enhance search and usability by more clearly identifying the specific courses they still need to take. Students indicated that current degree audits are often difficult to interpret and also do not clarify what specific courses are needed to fulfill various distribution requirements. Often, students have enough credits to graduate but are missing specific course requirements, and that is not clear in their degree audit.

Finally, we conducted an analysis of the students’ maps to identify the prevalence of lost time, momentum, or money resulting from existing tools. During the annotation part of the mapping protocol, students were asked to note where they were negatively impacted, and to describe what they lost. Of the 23 completed maps, 13 indicated that, even with the use of planning tools, students had lost time or money or had a stressful experience. Jennifer’s annotation said, “Missing 7.5 credits for graduation. All major and minor credits were fulfilled or in progress. COST: Stress thinking I would not graduate when [tool] looked like everything was checked off.” BP, who attended a different school, annotated his map to say, “Had to pay back some of my scholarship to pay for the extra course. Exhaustion.”

Taken together, these analyses indicate that current advising and planning technologies do not meet BLI/LI students’ needs and in fact, may be getting in the way of their progression to a degree. Thus, we find further support for our hypothesis that the null effects of these tools found in quantitative literature may stem in large part from the fact that the tools do not meet the needs of BLI/LI students.

Conclusions and Recommendations

New-majority students like Al rely on planning and scheduling tools to help them register for the right courses at the right time and stay on track to a degree. And yet, research to date shows that current advising, planning, and scheduling products have not improved student progression and completion rates. We undertook this study to understand why this might be the case. We questioned what functions and capabilities are necessary in advising technologies to support the success of BLI/LI students in broad-access institutions. We spoke to students from these groups to understand how they use these tools and what they say they need from them. What we found indicates that one possible explanation is that these tools do not provide BLI/LI students with the information they need, nor do they reduce the complexity of the planning and registration process.

It is important to remember that these findings come from BLI/LI students themselves—though we triangulated on their perceptions by speaking with institutional actors, our study made student opinions primary. We need to take their voices seriously if the field is to improve advising and planning tools and processes in ways that will actually accelerate BLI/LI students’ momentum towards degree completion. Fundamentally, this study is a call to redesign tools with student voice and needs at the center, addressing the cognitive complexity of the course planning process and including the information that students most say they need.

Most of the recommendations stemming from this study will need to be implemented by technology companies and IT professionals who design and build advising and planning tools. But institutional actors have a role to play, as well, by insisting that product designers build tools that meet student needs, and by using their purchasing power to reward product designers who actually do so. To that end, we have four recommendations to improve advising and planning tools that, given the data presented in this paper, may help them live up to their promise and, eventually, lead to improved student outcomes.

First, as we have noted, products need to be designed for today’s new-majority students—BLI/LI students need software that is easy to use, intuitive, fast, accurate, and consistent in its visual design. Moreover, low-income students especially tell us that their access to technology can be limited, so tools need to be designed to work in multiple modalities that are readily available and to offer cross-platform compatibility and access (e.g., mobile, desktop, and multiple browsers).

Second, products need to use “real words,” rather than jargon or generic language. Students gave us a long list of words they do not clearly understand. They noted that current planning tools assume that they have background knowledge that many first-generation students in particular do not have and, in the absence of this knowledge, tools become useless. To remove ambiguity, the software could have features to provide more context or resources for specific terms/names.

Third, include information that BLI/LI students actually need to make program planning decisions that address the complexities of their lives. Offer more functionalities for students to connect their school and personal schedules, integrate information such as course ratings, and access supports regarding food and housing insecurity. New-majority students need to consider more than graduation requirements when building course schedules; providing them with more robust sets of information can help them address the multifaceted nature of planning and enable them to build course schedules that keep them on track to graduation and balance work, family, and school obligations.

Finally, involve new-majority students in product design and development. Students are experts in their own lives, and as this study shows, can provide robust information to inform “solutions” that address the college completion challenges they face. Centering and honoring their voices will improve advising and planning processes while also supporting product efficacy.

The data and analyses shared in this paper provide insight into why the spread of advising and planning tools has not led to improved student progression and completion. We hypothesized—and the data bore out—that current tools do not meet the needs of BLI/LI students who make up the new majority of higher education students. Not only do our findings provide explanatory power for why current tools have muted efficacy, but they also provide a roadmap to improvement by reminding us to include BLI/LI students in product design discussions in order to ensure their needs are truly met.

Author Note

We have no known conflict of interest to disclose.

This study was funded by Stellic via a Bill and Melinda Gates Foundation grant. The findings presented here do not represent the positions of either organization.

The authors thank Brian Mikesell and Sebastian Trolez for research assistance. Representatives from the two colleges included in this study were instrumental in facilitating the work; we thank them for their efforts.

Correspondence regarding this study may be directed to Dr. Melinda Karp.

References

Bailey, T., Jaggars, S. S., & Jenkins, D. (2015). What we know about guided pathways: Helping students to complete programs faster. Community College Research Center, Teachers College, Columbia University. https://ccrc.tc.columbia.edu/publications/what-we-know-about-guided-pathways-packet.html

Belfield, C. R., Jenkins, D., & Fink, J. (2019). Early momentum metrics: Leading indicators for community college improvement. CCRC Research Brief. Community College Research Center, Teachers College, Columbia University. https://ccrc.tc.columbia.edu/publications/early-momentum-metrics-leading-indicators.html

Bharadwaj, P., Shaw, C., Condon, K., Rich, J., Janson, N., & Bryant, G. (2023). Driving toward a degree 2023: Awareness, belonging, and coordination. Tyton Partners.

Cannella, G. S., & Lincoln, Y. S. (2012). Deploying qualitative methods for critical social purposes. In S. R. Steinberg & G. S. Cannella (Eds.), Critical qualitative research reader (pp. 104–115). Peter Lang.

Carspecken, P. F. (2012). Basic concepts in methodological theory: Action, structure, and system within a communicative pragmatics framework. In S. R. Steinberg & G. S. Cannella (Eds.), Critical qualitative research reader (pp. 43–66). Peter Lang.

Civitas Learning. (2020). What matters most for equity. Community Insights, 2(1), 1–20. https://info.civitaslearning.com/rs/594-GET-124/images/Civitas%20Learning%20-%20Community%20Insights%20Report%20on%20Equity.pdf

Dietz, A., King, P., & Smith, R. (2008). The process management memory jogger. GOAL/QPC.

Espinosa, L. L., Turk, J. M., Taylor, M., & Chessman, H. M. (2019). Race and ethnicity in higher education: A status report. American Council on Education.

Galanek, J. D., Gierdowski, D. C., & Brooks, D. C. (2018). ECAR study of undergraduate students and information technology, 2018. Research report. ECAR. https://www.educause.edu/ecar/research-publications/ecar-study-of-undergraduate-students-and-information-technology/2018/introduction-and-key-findings

Grama, J. L., & Brooks, D. C. (2018). Trends and technologies: Domain reports. Research report. ECAR. https://www.educause.edu/ecar/research-publications/trends-and-technologies-domain-reports/2018/overview

Kalamkarian, H. S., Pellegrino, L., Lopez, A., & Barnett, E. A. (2020). Lessons learned from advising redesigns at three colleges. Community College Research Center, Teachers College, Columbia University. https://ccrc.tc.columbia.edu/publications/lessons-learned-advising-redesigns-three-colleges.html

Karp, M., Ackerson, S., Cheng, I., Cocatre-Zilgien, E., Costelloe, S., Freeman, B., Lemire, S., Linderman, D., McFarlane, B., Moulton, S., O’Shea, J., Porowski, A., & Richburg-Hayes, L. (2021). Effective advising for postsecondary students: A practice guide for educators (WWC 2022003). National Center for Education Evaluation and Regional Assistance (NCEE), Institute of Education Sciences, U.S. Department of Education. https://ies.ed.gov/ncee/wwc/PracticeGuide/28#tab-summary

Kirkhart, K. E. (2010). Eyes on the prize: Multicultural validity and evaluation theory. American Journal of Evaluation, 31(3), 400–413. https://doi.org/10.1177/1098214010373645

LeBlanc, M. E., Testa, C., Waterman, P. D., Reisner, S. L., Chen, J. T., Breedlove, E. R., Mbaye, F., Nwamah, A., Mayer, K. H., Oendari, A., & Krieger, N. (2023). Contextualizing response rates during the COVID-19 pandemic: Experiences from a Boston-based community health centers study. Journal of Public Health Management and Practice, 29(6), 882–891. https://doi.org/10.1097/PHH.0000000000001785

Mayer, A. K., Kalamkarian, H. S., Cohen, B., Pellegrino, L., Boynton, M., & Yang, E. (2019). Integrating technology and advising: Studying enhancements to colleges’ iPASS practices. Community College Research Center, Teachers College, Columbia University. https://ccrc.tc.columbia.edu/publications/integrating-technology-advising-ipass-enhancements.html

Maxwell, J. A. (2013). Qualitative research design: An interactive approach. Sage.

Means, B., & Neisler, J. (2021). Teaching and learning in the time of COVID: The student perspective. Online Learning, 25(1), 8–27. https://doi.org/10.24059/olj.v25i1.2496

Mertens, D. M. (2015). Research and evaluation in education and psychology: Integrating diversity with quantitative, qualitative, and mixed methods (4th ed.). SAGE Publications.

Nastasi, B. K., & Hitchcock, J. H. (2015). Mixed methods research and culture-specific interventions: Program design and evaluation (Vol. 2). SAGE Publications.

Pellegrino, L., Lopez Salazar, A., & Kalamkarian, H. S. (2021). Five years later: Technology and advising redesign at early adopter colleges. CCRC Research Brief. Community College Research Center, Teachers College, Columbia University. https://ccrc.tc.columbia.edu/publications/technology-advising-redesign-early-adopter-colleges.html

Rossman, D., Alamuddin, R., Kurzweil, M., & Karon, J. (2021). MAAPS advising experiment: Evaluation findings after four years. Ithaka S+R. https://sr.ithaka.org/publications/maaps-advising-experiment/

Rothbaum, J., & Bee, A. (2022). How has the pandemic continued to affect survey response: Using administrative data to evaluate nonresponse in the 2022 Current Population Survey Annual Social and Economic Supplement. Research Matters. https://www.census.gov/newsroom/blogs/research-matters/2022/09/how-did-the-pandemic-affect-survey-response.html

Shaw, C., Atanasio, R., Bryant, G., Michel, L., & Nguyen, A. (2021a). Driving toward a degree 2021: Engaging students in a post-pandemic world. Tyton Partners. https://advising.sonoma.edu/sites/advising/files/copy_of_dtad_research_brief_3_-_engaging_students_in_a_post-pandemic_world.pdf

Shaw, C., Atanasio, R., Bryant, G., Michel, L., & Nguyen, A. (2021b). Driving toward a degree 2021: Equity in advising: Prioritization and practice. Tyton Partners. https://tytonpartners.com/app/uploads/2021/06/D2D21_04_Equity.pdf

Thelin, J. R. (2019). A history of American higher education (3rd ed.). Johns Hopkins University Press.

Velasco, T., Hughes, K. L., & Barnett, E. A. (2020). Trends in key performance indicators among colleges participating in a technology-mediated advising reform initiative. Community College Research Center, Teachers College, Columbia University. https://ccrc.tc.columbia.edu/publications/kpis-technology-mediated-advising-reform.html

Wesley, A., & Parnell, A. (2020). Advising and prior learning assessment for degree completion. Recognition of prior learning in the 21st century. Western Interstate Commission for Higher Education. https://www.wiche.edu/key-initiatives/recognition-of-learning/pla-advising/

Zeidenberg, M. (2012). Valuable learning or “spinning their wheels”? Understanding excess credits earned by community college associate degree completers. Community College Research Center, Teachers College, Columbia University. https://ccrc.tc.columbia.edu/publications/understanding-excess-credits.html

Appendix A: Institution Profiles

| Institution 1 | Institution 2 |
|---|---|
| Midwest region | Northeast region |
| Regional 4-year public | Regional 4-year public; large system |
| 11,000 students | 15,000 students |
| ~25% minority students | 85% minority students |
| ~20% first-generation college student | Over 50% first-generation college student |
| Over 40% Pell-eligible | ~55% Pell-eligible |
| ~40% part-time | Over 35% part-time |
| ~43% 6-year grad rate | ~53% 6-year grad rate |

Appendix B: Student Process Maps

Figure 1. Student Process Map created by a student named Al, a male Caucasian who describes his financial status as extreme poverty.

Figure 2. Student Process Map created by a student named Valeria, a female Chicana student who is ineligible for financial aid due to being undocumented.

Figure 3. Student Process Map created by a student named Sireana, a white female who works full-time and supports a family of four.

Appendix C: Program Planning and Cognitive Complexity

Figure 4. Student Results

Appendix D: “Trouble” Words

- Corequisite

- prerequisite

- Audit

- Bursar

- General education

- hybrid

- major courses

- Not eligible

- Writing intensive

- acronyms

- BA, BS/MS

- LAB

- asynchronous

- “There was this word that basically meant you had to take both classes and I didn’t understand that”

- Synchronous-online class

- matriculated

- Requirement designation

- Regular non-liberal arts

- HEO

- REF

- RFP

- Electives

- Hold

- Registrar

1 All student and advisor names in this paper are pseudonyms.

2 See Appendix C for details on the types of things students think about, steps they take, and resources they access while registration planning.

3 Our study did not explicitly explore first-generation college students’ experiences. However, as we have noted, many students self-identified as first-generation, and this status, which often intersects with race and class, was salient in many of their maps and reflective interviews. Thus, we highlight it here as another important consideration when thinking about ways to foreground the needs of new-majority students.

4 Some planning tools do have this capability, but students in this study either did not know about or did not attend schools with this option.